Free Statistics

of Irreproducible Research!

Description of Statistical Computation | |||||||||||||||||||||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Author's title | |||||||||||||||||||||||||||||||||||||||||||||

| Author | *The author of this computation has been verified* | ||||||||||||||||||||||||||||||||||||||||||||

| R Software Module | rwasp_regression_trees1.wasp | ||||||||||||||||||||||||||||||||||||||||||||

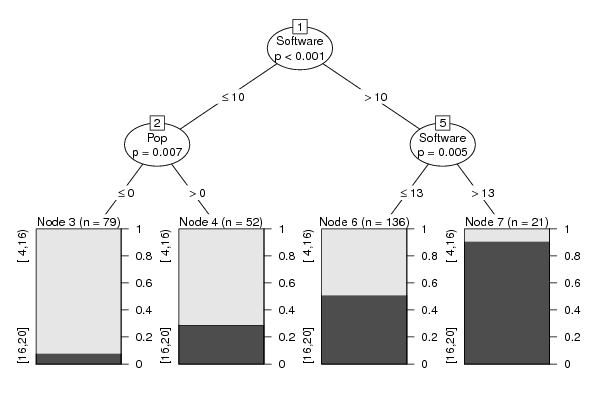

| Title produced by software | Recursive Partitioning (Regression Trees) | ||||||||||||||||||||||||||||||||||||||||||||

| Date of computation | Sun, 05 Dec 2010 19:35:21 +0000 | ||||||||||||||||||||||||||||||||||||||||||||

| Cite this page as follows | Statistical Computations at FreeStatistics.org, Office for Research Development and Education, URL https://freestatistics.org/blog/index.php?v=date/2010/Dec/05/t1291577663x9aa8uvx2sbuy18.htm/, Retrieved Fri, 05 Dec 2025 08:02:35 +0000 | ||||||||||||||||||||||||||||||||||||||||||||

| Statistical Computations at FreeStatistics.org, Office for Research Development and Education, URL https://freestatistics.org/blog/index.php?pk=105479, Retrieved Fri, 05 Dec 2025 08:02:35 +0000 | |||||||||||||||||||||||||||||||||||||||||||||

| QR Codes: | |||||||||||||||||||||||||||||||||||||||||||||

|

| |||||||||||||||||||||||||||||||||||||||||||||

| Original text written by user: | |||||||||||||||||||||||||||||||||||||||||||||

| IsPrivate? | No (this computation is public) | ||||||||||||||||||||||||||||||||||||||||||||

| User-defined keywords | |||||||||||||||||||||||||||||||||||||||||||||

| Estimated Impact | 966 | ||||||||||||||||||||||||||||||||||||||||||||

Tree of Dependent Computations

Family? (F = Feedback message, R = changed R code, M = changed R Module, P = changed Parameters, D = changed Data)

| Family? | Computation | Title | Date | Identifier |
|---|---|---|---|---|
| - | Recursive Partitioning (Regression Trees) | | 2010-12-05 19:35:21 | d76b387543b13b5e3afd8ff9e5fdc89f [Current] |
| - PD | Recursive Partitioning (Regression Trees) | WS10 RP DMA | 2010-12-09 17:24:18 | 2099aacba481f75a7f949aa310cab952 |
| - R PD | Recursive Partitioning (Regression Trees) | recursive partiti... | 2010-12-09 21:27:00 | 97ad38b1c3b35a5feca8b85f7bc7b3ff |
| - P | Recursive Partitioning (Regression Trees) | Recursive partiti... | 2010-12-09 21:41:03 | 97ad38b1c3b35a5feca8b85f7bc7b3ff |
| - R PD | Recursive Partitioning (Regression Trees) | | 2011-12-11 11:24:23 | 06c08141d7d783218a8164fd2ea166f2 |
| - R P | Recursive Partitioning (Regression Trees) | Cross Validation | 2011-12-13 11:01:21 | d1ce18d003fa52f731d1c3ce8b58d5f9 |
| - PD | Recursive Partitioning (Regression Trees) | | 2011-12-20 21:27:44 | f1de53e71fac758e9834be8effee591f |
| - R PD | Recursive Partitioning (Regression Trees) | | 2011-12-12 18:28:46 | ec2187f7727da5d5d939740b21b8b68a |
| - R | Recursive Partitioning (Regression Trees) | WS10-Cross valida... | 2011-12-13 13:58:17 | 69d59b79aaf660457acc70a0ef0bfdab |
| - R | Recursive Partitioning (Regression Trees) | | 2011-12-13 18:04:19 | 9401a40688cf36283be626153bc5a38b |
| - R PD | Recursive Partitioning (Regression Trees) | | 2011-12-11 11:02:45 | 06c08141d7d783218a8164fd2ea166f2 |
| - R | Recursive Partitioning (Regression Trees) | | 2011-12-12 17:55:35 | ec2187f7727da5d5d939740b21b8b68a |
| - R | Recursive Partitioning (Regression Trees) | Recursive Partiti... | 2011-12-13 10:43:17 | d1ce18d003fa52f731d1c3ce8b58d5f9 |
| - R | Recursive Partitioning (Regression Trees) | WS10-Recursive Pa... | 2011-12-13 13:32:58 | 69d59b79aaf660457acc70a0ef0bfdab |
| - R | Recursive Partitioning (Regression Trees) | | 2011-12-13 17:35:19 | 9401a40688cf36283be626153bc5a38b |
| - PD | Recursive Partitioning (Regression Trees) | Recursive partiti... | 2010-12-10 10:17:52 | 8a9a6f7c332640af31ddca253a8ded58 |
| - P | Recursive Partitioning (Regression Trees) | 3 categorien | 2010-12-17 09:49:31 | 8a9a6f7c332640af31ddca253a8ded58 |
| - P | Recursive Partitioning (Regression Trees) | 2 categorieen | 2010-12-18 21:49:25 | 74deae64b71f9d77c839af86f7c687b5 |
| - P | Recursive Partitioning (Regression Trees) | 3 categorieen | 2010-12-18 21:58:20 | 74deae64b71f9d77c839af86f7c687b5 |
| - PD | Recursive Partitioning (Regression Trees) | Recursive Partiti... | 2010-12-10 10:59:38 | 74deae64b71f9d77c839af86f7c687b5 |
| - PD | Recursive Partitioning (Regression Trees) | W10 RP Cat | 2010-12-10 12:10:27 | 56d90b683fcd93137645f9226b43c62b |
| F PD | Recursive Partitioning (Regression Trees) | W10 RP Cat | 2010-12-10 12:16:11 | 56d90b683fcd93137645f9226b43c62b |
| - PD | Recursive Partitioning (Regression Trees) | Brutoloonindex | 2010-12-10 12:54:47 | 13c73ac943380855a1c72833078e44d2 |
| - P | Recursive Partitioning (Regression Trees) | Brutoloonindex | 2010-12-10 12:56:41 | 3074aa973ede76ac75d398946b01602f |
| - PD | Recursive Partitioning (Regression Trees) | workshop 10 - rec... | 2010-12-10 13:19:45 | 956e8df26b41c50d9c6c2ec1b6a122a8 |
| - R PD | Recursive Partitioning (Regression Trees) | ws 10 rp 2 classes | 2010-12-10 13:27:18 | 4eaa304e6a28c475ba490fccf4c01ad3 |
| - PD | Recursive Partitioning (Regression Trees) | Workshop 10 - Rec... | 2010-12-10 13:26:07 | 6f0e7a2d1a07390e3505a2db8288f975 |
| - R PD | Recursive Partitioning (Regression Trees) | Workshop 10 - PE ... | 2010-12-10 13:33:05 | 8b017ffbf7b0eded54d8efebfb3e4cfa |
| - PD | Recursive Partitioning (Regression Trees) | WS10 | 2010-12-10 13:53:17 | c7506ced21a6c0dca45d37c8a93c80e0 |
| - P | Recursive Partitioning (Regression Trees) | WS10 - RP (catego... | 2010-12-10 14:58:20 | 4a7069087cf9e0eda253aeed7d8c30d6 |
| F P | Recursive Partitioning (Regression Trees) | w10 | 2010-12-14 09:13:07 | 247f085ab5b7724f755ad01dc754a3e8 |
| - PD | Recursive Partitioning (Regression Trees) | WS10 | 2010-12-10 14:09:52 | c7506ced21a6c0dca45d37c8a93c80e0 |
| - P | Recursive Partitioning (Regression Trees) | WS10 - Crossvalid... | 2010-12-10 15:01:44 | 4a7069087cf9e0eda253aeed7d8c30d6 |
| F P | Recursive Partitioning (Regression Trees) | w10 | 2010-12-14 09:14:24 | 247f085ab5b7724f755ad01dc754a3e8 |
| - PD | Recursive Partitioning (Regression Trees) | Recursive Partici... | 2010-12-10 14:12:47 | 9894f466352df31a128e82ec8d720241 |
| - PD | Recursive Partitioning (Regression Trees) | Recursive Partici... | 2010-12-10 14:18:44 | 9894f466352df31a128e82ec8d720241 |
| - PD | Recursive Partitioning (Regression Trees) | Recursive Partiti... | 2010-12-10 21:32:17 | 1429a1a14191a86916b95357f6de790b |
| F | Recursive Partitioning (Regression Trees) | Recursive Partiti... | 2010-12-10 21:40:26 | 1429a1a14191a86916b95357f6de790b |
| - PD | Recursive Partitioning (Regression Trees) | | 2011-12-13 18:32:30 | e21b9c93af4eb9605ecfaf58a559e5ab |
| - RMP | Recursive Partitioning (Regression Trees) | | 2011-12-13 20:18:05 | 74be16979710d4c4e7c6647856088456 |
| - PD | Recursive Partitioning (Regression Trees) | WS 10 Recursive P... | 2010-12-11 14:37:37 | 8081b8996d5947580de3eb171e82db4f |
| - PD | Recursive Partitioning (Regression Trees) | WS 10 Recursive P... | 2010-12-11 14:49:21 | 8081b8996d5947580de3eb171e82db4f |
| - R PD | Recursive Partitioning (Regression Trees) | W10-Recursive Par... | 2010-12-11 15:29:12 | 48146708a479232c43a8f6e52fbf83b4 |
| - PD | Recursive Partitioning (Regression Trees) | W10-test gender e... | 2010-12-11 16:35:35 | 48146708a479232c43a8f6e52fbf83b4 |
| - PD | Recursive Partitioning (Regression Trees) | recursive partiti... | 2010-12-12 08:36:21 | 95e8426e0df851c9330605aa1e892ab5 |
| - P | Recursive Partitioning (Regression Trees) | Cross validation ... | 2010-12-12 08:49:43 | 95e8426e0df851c9330605aa1e892ab5 |
| - PD | Recursive Partitioning (Regression Trees) | workshop 10 matrice | 2010-12-12 10:24:22 | 6ff9fb24bdca608d2f4f1f9db3f6445e |
| - P | Recursive Partitioning (Regression Trees) | paper - recursiev... | 2010-12-16 10:38:27 | 6ff9fb24bdca608d2f4f1f9db3f6445e |
| - PD | Recursive Partitioning (Regression Trees) | WS 10 RP 2 klassen | 2010-12-12 12:52:37 | 49c7a512c56172bc46ae7e93e5b58c1c |

[Truncated]


Dataset

Dataseries X:

1 1 41 38 13 12 14
1 1 39 32 16 11 18
1 1 30 35 19 15 11
1 0 31 33 15 6 12
1 1 34 37 14 13 16
1 1 35 29 13 10 18
1 1 39 31 19 12 14
1 1 34 36 15 14 14
1 1 36 35 14 12 15
1 1 37 38 15 9 15
1 0 38 31 16 10 17
1 1 36 34 16 12 19
1 0 38 35 16 12 10
1 1 39 38 16 11 16
1 1 33 37 17 15 18
1 0 32 33 15 12 14
1 0 36 32 15 10 14
1 1 38 38 20 12 17
1 0 39 38 18 11 14
1 1 32 32 16 12 16
1 0 32 33 16 11 18
1 1 31 31 16 12 11
1 1 39 38 19 13 14
1 1 37 39 16 11 12
1 0 39 32 17 12 17
1 1 41 32 17 13 9
1 0 36 35 16 10 16
1 1 33 37 15 14 14
1 1 33 33 16 12 15
1 0 34 33 14 10 11
1 1 31 31 15 12 16
1 0 27 32 12 8 13
1 1 37 31 14 10 17
1 1 34 37 16 12 15
1 0 34 30 14 12 14
1 0 32 33 10 7 16
1 0 29 31 10 9 9
1 0 36 33 14 12 15
1 1 29 31 16 10 17
1 0 35 33 16 10 13
1 0 37 32 16 10 15
1 1 34 33 14 12 16
1 0 38 32 20 15 16
1 0 35 33 14 10 12
1 1 38 28 14 10 15
1 1 37 35 11 12 11
1 1 38 39 14 13 15
1 1 33 34 15 11 15
1 1 36 38 16 11 17
1 0 38 32 14 12 13
1 1 32 38 16 14 16
1 0 32 30 14 10 14
1 0 32 33 12 12 11
1 1 34 38 16 13 12
1 0 32 32 9 5 12
1 1 37 35 14 6 15
1 1 39 34 16 12 16
1 1 29 34 16 12 15
1 0 37 36 15 11 12
1 1 35 34 16 10 12
1 0 30 28 12 7 8
1 0 38 34 16 12 13
1 1 34 35 16 14 11
1 1 31 35 14 11 14
1 1 34 31 16 12 15
1 0 35 37 17 13 10
1 1 36 35 18 14 11
1 0 30 27 18 11 12
1 1 39 40 12 12 15
1 0 35 37 16 12 15
1 0 38 36 10 8 14
1 1 31 38 14 11 16
1 1 34 39 18 14 15
1 0 38 41 18 14 15
1 0 34 27 16 12 13
1 1 39 30 17 9 12
1 1 37 37 16 13 17
1 1 34 31 16 11 13
1 0 28 31 13 12 15
1 0 37 27 16 12 13
1 0 33 36 16 12 15
1 1 35 37 16 12 15
1 0 37 33 15 12 16
1 1 32 34 15 11 15
1 1 33 31 16 10 14
1 0 38 39 14 9 15
1 1 33 34 16 12 14
1 1 29 32 16 12 13
1 1 33 33 15 12 7
1 1 31 36 12 9 17
1 1 36 32 17 15 13
1 1 35 41 16 12 15
1 1 32 28 15 12 14
1 1 29 30 13 12 13
1 1 39 36 16 10 16
1 1 37 35 16 13 12
1 1 35 31 16 9 14
1 0 37 34 16 12 17
1 0 32 36 14 10 15
1 1 38 36 16 14 17
1 0 37 35 16 11 12
1 1 36 37 20 15 16
1 0 32 28 15 11 11
1 1 33 39 16 11 15
1 0 40 32 13 12 9
1 1 38 35 17 12 16
1 0 41 39 16 12 15
1 0 36 35 16 11 10
1 1 43 42 12 7 10
1 1 30 34 16 12 15
1 1 31 33 16 14 11
1 1 32 41 17 11 13
1 1 37 34 12 10 18
1 0 37 32 18 13 16
1 1 33 40 14 13 14
1 1 34 40 14 8 14
1 1 33 35 13 11 14
1 1 38 36 16 12 14
1 0 33 37 13 11 12
1 1 31 27 16 13 14
1 1 38 39 13 12 15
1 1 37 38 16 14 15
1 1 36 31 15 13 15
1 1 31 33 16 15 13
1 0 39 32 15 10 17
1 1 44 39 17 11 17
1 1 33 36 15 9 19
1 1 35 33 12 11 15
1 0 32 33 16 10 13
1 0 28 32 10 11 9
1 1 40 37 16 8 15
1 0 27 30 12 11 15
1 0 37 38 14 12 15
1 1 32 29 15 12 16
1 0 28 22 13 9 11
1 0 34 35 15 11 14
1 1 30 35 11 10 11
1 1 35 34 12 8 15
1 0 31 35 11 9 13
1 1 32 34 16 8 15
1 0 30 37 15 9 16
1 1 30 35 17 15 14
1 0 31 23 16 11 15
1 1 40 31 10 8 16
1 1 32 27 18 13 16
1 0 36 36 13 12 11
1 0 32 31 16 12 12
1 0 35 32 13 9 9
1 1 38 39 10 7 16
1 1 42 37 15 13 13
1 0 34 38 16 9 16
1 1 35 39 16 6 12
1 1 38 34 14 8 9
1 1 33 31 10 8 13
1 1 32 37 13 6 14
1 1 33 36 15 9 19
1 1 34 32 16 11 13
1 1 32 38 12 8 12
0 0 27 26 13 10 10
0 0 31 26 12 8 14
0 0 38 33 17 14 16
0 1 34 39 15 10 10
0 0 24 30 10 8 11
0 0 30 33 14 11 14
0 1 26 25 11 12 12
0 1 34 38 13 12 9
0 0 27 37 16 12 9
0 0 37 31 12 5 11
0 1 36 37 16 12 16
0 0 41 35 12 10 9
0 1 29 25 9 7 13
0 1 36 28 12 12 16
0 0 32 35 15 11 13
0 1 37 33 12 8 9
0 0 30 30 12 9 12
0 1 31 31 14 10 16
0 1 38 37 12 9 11
0 1 36 36 16 12 14
0 0 35 30 11 6 13
0 0 31 36 19 15 15
0 0 38 32 15 12 14
0 1 22 28 8 12 16
0 1 32 36 16 12 13
0 0 36 34 17 11 14
0 1 39 31 12 7 15
0 0 28 28 11 7 13
0 0 32 36 11 5 11
0 1 32 36 14 12 11
0 1 38 40 16 12 14
0 1 32 33 12 3 15
0 1 35 37 16 11 11
0 1 32 32 13 10 15
0 0 37 38 15 12 12
0 1 34 31 16 9 14
0 1 33 37 16 12 14
0 0 33 33 14 9 8
0 0 30 30 16 12 9
0 0 24 30 14 10 15
0 0 34 31 11 9 17
0 0 34 32 12 12 13
0 1 33 34 15 8 15
0 1 34 36 15 11 15
0 1 35 37 16 11 14
0 0 35 36 16 12 16
0 0 36 33 11 10 13
0 0 34 33 15 10 16
0 1 34 33 12 12 9
0 0 41 44 12 12 16
0 0 32 39 15 11 11
0 0 30 32 15 8 10
0 1 35 35 16 12 11
0 0 28 25 14 10 15
0 1 33 35 17 11 17
0 1 39 34 14 10 14
0 0 36 35 13 8 8
0 1 36 39 15 12 15
0 0 35 33 13 12 11
0 0 38 36 14 10 16
0 1 33 32 15 12 10
0 0 31 32 12 9 15
0 1 32 36 8 6 16
0 0 31 32 14 10 19
0 0 33 34 14 9 12
0 0 34 33 11 9 8
0 0 34 35 12 9 11
0 1 34 30 13 6 14
0 0 33 38 10 10 9
0 0 32 34 16 6 15
0 1 41 33 18 14 13
0 1 34 32 13 10 16
0 0 36 31 11 10 11
0 0 37 30 4 6 12
0 0 36 27 13 12 13
0 1 29 31 16 12 10
0 0 37 30 10 7 11
0 0 27 32 12 8 12
0 0 35 35 12 11 8
0 0 28 28 10 3 12
0 0 35 33 13 6 12
0 0 29 35 12 8 11
0 0 32 35 14 9 13
0 1 36 32 10 9 14
0 1 19 21 12 8 10
0 1 21 20 12 9 12
0 0 31 34 11 7 15
0 0 33 32 10 7 13
0 1 36 34 12 6 13
0 1 33 32 16 9 13
0 0 37 33 12 10 12
0 0 34 33 14 11 12
0 0 35 37 16 12 9
0 1 31 32 14 8 9
0 1 37 34 13 11 15
0 1 35 30 4 3 10
0 1 27 30 15 11 14
0 0 34 38 11 12 15
0 0 40 36 11 7 7
0 0 29 32 14 9 14
0 0 38 34 15 12 8
0 1 34 33 14 8 10
0 0 21 27 13 11 13
0 0 36 32 11 8 13
0 1 38 34 15 10 13
0 0 30 29 11 8 8
0 0 35 35 13 7 12
0 1 30 27 13 8 13
0 1 36 33 16 10 12
0 0 34 38 13 8 10
0 1 35 36 16 12 13
0 0 34 33 16 14 12
0 0 32 39 12 7 9
0 1 33 29 7 6 15
0 0 33 32 16 11 13
0 1 26 34 5 4 13
0 0 35 38 16 9 13
0 0 21 17 4 5 15
0 0 38 35 12 9 15
0 0 35 32 15 11 14
0 1 33 34 14 12 15
0 0 37 36 11 9 11
0 0 38 31 16 12 15
0 1 34 35 15 10 14
0 0 27 29 12 9 13
0 1 16 22 6 6 12
0 0 40 41 16 10 16
0 0 36 36 10 9 16
0 1 42 42 15 13 9
0 1 30 33 14 12 14

Tables (Output of Computation)

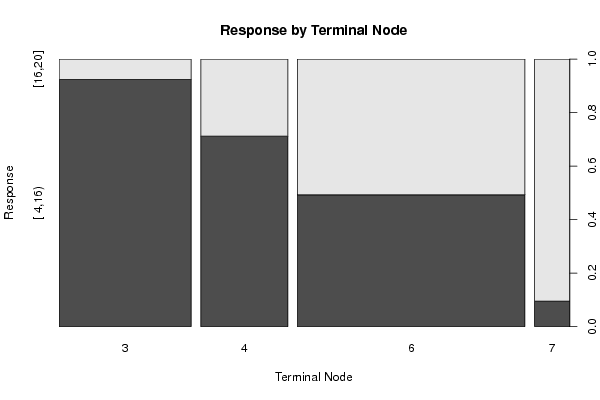

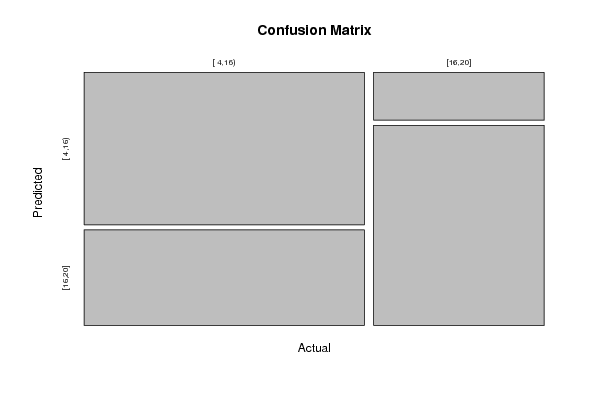

Figures (Output of Computation)

| Input Parameters & R Code | |
|---|---|
| Parameters (Session) | par1 = 3 ; par2 = equal ; par3 = 2 ; par4 = no ; |
| Parameters (R input) | par1 = 5 ; par2 = quantiles ; par3 = 2 ; par4 = no ; |
| R code (references can be found in the software module) | `library(party)` |