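# Lower panel: print the p-value of the correlation test between x and y.
# The test method is read from the global variable par1
# ('pearson', 'spearman' or 'kendall').
panel.tau <- function(x, y, digits=2, prefix='', cex.cor, ...)
{
  usr <- par('usr'); on.exit(par(usr = usr))  # restore the plot region on exit
  par(usr = c(0, 1, 0, 1))
  rr <- cor.test(x, y, method=par1)
  r <- round(rr$p.value,2)
  txt <- format(c(r, 0.123456789), digits=digits)[1]
  txt <- paste(prefix, txt, sep='')
  # scale the text to the panel width unless an explicit size is supplied
  if(missing(cex.cor)) cex.cor <- 0.5/strwidth(txt)
  text(0.5, 0.5, txt, cex = cex.cor)
}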

# Diagonal panel: histogram of x, rescaled so that the tallest bar has height 1
panel.hist <- function(x, ...)
{
  usr <- par('usr'); on.exit(par(usr = usr))  # restore the plot region on exit
  par(usr = c(usr[1:2], 0, 1.5) )
  h <- hist(x, plot = FALSE)
  breaks <- h$breaks; nB <- length(breaks)
  y <- h$counts; y <- y/max(y)
  rect(breaks[-nB], 0, breaks[-1], y, col='grey', ...)
}

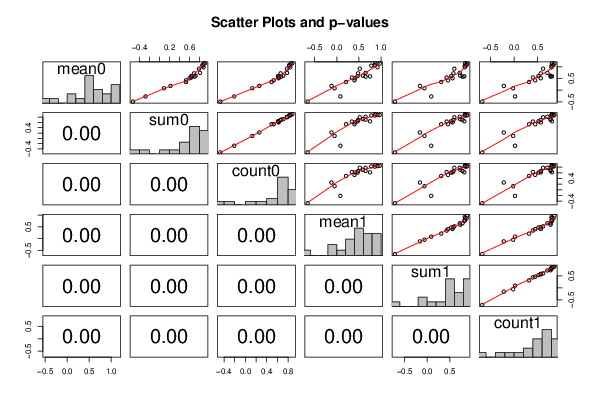

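# Scatterplot matrix of the series (rows of y): histograms on the diagonal,
# smoothed scatterplots above it and correlation-test p-values below it
bitmap(file='test1.png')
pairs(t(y),diag.panel=panel.hist, upper.panel=panel.smooth, lower.panel=panel.tau, main=main)
dev.off()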

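# Load the table.start()/table.element()/table.save() helpers used below
load(file='createtable')
n <- nrow(y)  # number of data series (rows of y)
n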

# Table 1: correlation matrix of all series, using the method given in par1
a<-table.start()
a<-table.row.start(a)
a<-table.element(a,paste('Correlations for all pairs of data series (method=',par1,')',sep=''),n+1,TRUE)
a<-table.row.end(a)
# header row: one column per series
a<-table.row.start(a)
a<-table.element(a,' ',header=TRUE)
for (i in 1:n) {
  a<-table.element(a,dimnames(t(x))[[2]][i],header=TRUE)
}
a<-table.row.end(a)
# one row per series: its correlation estimate with every series
for (i in 1:n) {
  a<-table.row.start(a)
  a<-table.element(a,dimnames(t(x))[[2]][i],header=TRUE)
  for (j in 1:n) {
    r <- cor.test(y[i,],y[j,],method=par1)
    a<-table.element(a,round(r$estimate,3))
  }
  a<-table.row.end(a)
}
a<-table.end(a)
table.save(a,file='mytable.tab')

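ncorrs <- (n*n - n)/2  # number of distinct pairs, n(n-1)/2
# mycorrs[k, m]: number of pairs whose test m (1 = Pearson, 2 = Spearman,
# 3 = Kendall) is significant at alpha = k/100, for k = 1, ..., 10
mycorrs <- array(0, dim=c(10,3))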

# Table 2: Pearson, Spearman and Kendall estimates with p-values for every pair
a<-table.start()
a<-table.row.start(a)
a<-table.element(a,'Correlations for all pairs of data series with p-values',4,TRUE)
a<-table.row.end(a)
a<-table.row.start(a)
a<-table.element(a,'pair',1,TRUE)
a<-table.element(a,'Pearson r',1,TRUE)
a<-table.element(a,'Spearman rho',1,TRUE)
a<-table.element(a,'Kendall tau',1,TRUE)
a<-table.row.end(a)

cor.test(y[1,],y[2,],method=par1)

for (i in seq_len(n-1))
{
  for (j in (i+1):n)
  {
    # first row: the three correlation estimates for the pair (i, j)
    a<-table.row.start(a)
    dum <- paste(dimnames(t(x))[[2]][i],';',dimnames(t(x))[[2]][j],sep='')
    a<-table.element(a,dum,header=TRUE)
    rp <- cor.test(y[i,],y[j,],method='pearson')
    a<-table.element(a,round(rp$estimate,4))
    rs <- cor.test(y[i,],y[j,],method='spearman')
    a<-table.element(a,round(rs$estimate,4))
    rk <- cor.test(y[i,],y[j,],method='kendall')
    a<-table.element(a,round(rk$estimate,4))
    a<-table.row.end(a)
    # second row: the corresponding p-values, in parentheses
    a<-table.row.start(a)
    a<-table.element(a,'p-value',header=TRUE)
    a<-table.element(a,paste('(',round(rp$p.value,4),')',sep=''))
    a<-table.element(a,paste('(',round(rs$p.value,4),')',sep=''))
    a<-table.element(a,paste('(',round(rk$p.value,4),')',sep=''))
    a<-table.row.end(a)
    # count the pair as significant at each alpha = 0.01, 0.02, ..., 0.10
    for (iii in 1:10) {
      iiid100 <- iii / 100
      if (rp$p.value < iiid100) mycorrs[iii, 1] = mycorrs[iii, 1] + 1
      if (rs$p.value < iiid100) mycorrs[iii, 2] = mycorrs[iii, 2] + 1
      if (rk$p.value < iiid100) mycorrs[iii, 3] = mycorrs[iii, 3] + 1
    }
  }
}
a<-table.end(a)
table.save(a,file='mytable1.tab')

# Table 3: proportion of pairs found significant at each alpha level
a<-table.start()
a<-table.row.start(a)
a<-table.element(a,'Meta Analysis of Correlation Tests',4,TRUE)
a<-table.row.end(a)
a<-table.row.start(a)
a<-table.element(a,'Number of significant correlations divided by the total number of correlations',4,TRUE)
a<-table.row.end(a)
a<-table.row.start(a)
a<-table.element(a,'Type I error',1,TRUE)
a<-table.element(a,'Pearson r',1,TRUE)
a<-table.element(a,'Spearman rho',1,TRUE)
a<-table.element(a,'Kendall tau',1,TRUE)
a<-table.row.end(a)

for (iii in 1:10) {
  iiid100 <- iii / 100
  a<-table.row.start(a)
  a<-table.element(a,round(iiid100,2),header=TRUE)
  a<-table.element(a,round(mycorrs[iii,1]/ncorrs,2))
  a<-table.element(a,round(mycorrs[iii,2]/ncorrs,2))
  a<-table.element(a,round(mycorrs[iii,3]/ncorrs,2))
  a<-table.row.end(a)
}
a<-table.end(a)
table.save(a,file='mytable2.tab')
